In this blog post, I share my experience working with Red Hat Single Sign-On and provide detailed information and instructions on how to configure different enterprise Java applications with Single Sign-On using OpenID Connect (OIDC). The post is intended for anyone interested in Single Sign-On, and it should help developers get started with a Single Sign-On implementation as quickly as possible.

This blog post explains the high-level architecture (the end-to-end request flow among applications), the integration of SSO with JBoss EAP and JBoss BPM Suite, enabling SSO in Continuous Integration/Delivery, and the configuration of LDAP, Active Directory (AD) and Kerberos for SSO.

Single Sign-On Implementation in Java

For securing applications, Red Hat Single Sign-On (RH-SSO) provides the following options:

- OpenID Connect (OIDC)

- SAML

- OAuth 2.0

Red Hat Single Sign-On supports SAML, OAuth 2.0 and OpenID Connect out of the box.

OpenID Connect (OIDC) vs SAML

OIDC is a newer protocol supported by Red Hat Single Sign-On, whereas SAML is older and fully mature. Although SAML is proven, secure and supports a wide range of use cases, OIDC is recommended in most cases. In this blog, we use OIDC, which is an identity layer built on top of OAuth 2.0.

Architecture - End-to-End Application Request Flow

How does Single Sign-On Authentication work?

Single Sign-On provides a mechanism for authentication and authorization that lets users authenticate once against one of our applications without having to authenticate again for the other applications.

Red Hat has released Single Sign-On as a standalone product that provides seamless integration and federated authentication for enterprise applications.

When applications are secured by Single Sign-On, a user only needs to authenticate the first time they access any of the secured resources. Upon successful authentication, the roles associated with the user are retrieved from the local database (or an external shared database, if one is used) and are used to authorise access to all other associated resources. This allows the user to access any further authorised resources without re-authenticating. After the user logs out of an application, or an application invalidates the session programmatically, the process starts over.

The high-level request flow among different applications secured with Red Hat Single Sign-On is illustrated in the figure below.

The end-to-end request flow follows a similar pattern to any other SSO product. A user who tries to access an application for the first time without a valid authentication token is redirected to the Single Sign-On login page. Once the user logs in successfully, the SSO token (containing the token string along with the user's roles and other information) must be set in the “Authorization” request header as a bearer token and passed to the other applications.

Since the applications are already registered with the SSO server and have the client adapter installed, the adapter validates the token from the incoming request (using the realm's public key) and authenticates the user.
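As a rough illustration of the first thing the receiving side has to do, here is a minimal sketch (standard library only) that extracts the bearer token from the “Authorization” header. `BearerTokens` and `extract` are hypothetical names; the sketch only parses the header and deliberately does not verify the token, which remains the adapter's job.

```java
import java.util.Locale;
import java.util.Optional;

// Hypothetical helper: pulls an OAuth 2.0 bearer token out of an HTTP
// Authorization header value. It only parses the header; it does NOT
// verify the token's signature or expiry.
final class BearerTokens {

    private static final String PREFIX = "bearer ";

    private BearerTokens() {
    }

    // Returns the token string, or empty if the header is missing or not a bearer header.
    static Optional<String> extract(String authorizationHeader) {
        if (authorizationHeader == null) {
            return Optional.empty();
        }
        String value = authorizationHeader.trim();
        // The auth scheme name is case-insensitive, so compare in lower case
        if (!value.toLowerCase(Locale.ROOT).startsWith(PREFIX)) {
            return Optional.empty();
        }
        String token = value.substring(PREFIX.length()).trim();
        return token.isEmpty() ? Optional.empty() : Optional.of(token);
    }
}
```

A request for which `extract` returns an empty result carries no token at all, so a secured endpoint would reject it before any signature check takes place.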

If the token has expired or is invalid, the request is rejected with a 401 Unauthorized status code and the user is redirected to the login page, where a valid token can be obtained again. A user who is authenticated but lacks the required role typically receives 403 Forbidden instead.
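To make the expiry case concrete: access tokens issued by Red Hat Single Sign-On are JSON Web Tokens (JWTs) whose payload carries an “exp” claim in seconds since the Unix epoch. The sketch below, using the hypothetical class `TokenExpiry`, reads that claim so a caller could refresh a token before it is rejected. It assumes a well-formed three-part JWT, uses a naive regular expression instead of a JSON parser, and does not check the signature, so it must not be used to decide whether a token is trustworthy.

```java
import java.nio.charset.StandardCharsets;
import java.time.Instant;
import java.util.Base64;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper that reads the "exp" (expiry) claim from a JWT access
// token. It does NOT verify the signature, so use it only to decide when to
// refresh a token, never to decide whether to trust one.
final class TokenExpiry {

    // Naive match for "exp": <number> in the JSON payload (no JSON library assumed)
    private static final Pattern EXP = Pattern.compile("\"exp\"\\s*:\\s*(\\d+)");

    private TokenExpiry() {
    }

    // Returns the expiry instant encoded in the token's payload segment.
    static Instant expiresAt(String jwt) {
        String[] parts = jwt.split("\\.");
        if (parts.length < 2) {
            throw new IllegalArgumentException("Not a JWT: expected header.payload.signature");
        }
        // JWT segments are base64url-encoded, usually without padding
        byte[] json = Base64.getUrlDecoder().decode(parts[1]);
        Matcher m = EXP.matcher(new String(json, StandardCharsets.UTF_8));
        if (!m.find()) {
            throw new IllegalArgumentException("Token has no exp claim");
        }
        // "exp" is expressed in seconds since the Unix epoch
        return Instant.ofEpochSecond(Long.parseLong(m.group(1)));
    }

    // True if the token has expired at the given moment, so a secured
    // application would reject it.
    static boolean isExpired(String jwt, Instant now) {
        return !now.isBefore(expiresAt(jwt));
    }
}
```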

The sample code below shows how to pass the authentication token to another application.

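```java
import java.io.IOException;

import javax.servlet.http.HttpServletRequest;

import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.keycloak.KeycloakSecurityContext;

// The class name is illustrative; the method is the relevant part.
public class ServiceInvoker {

    // Calls another SSO-secured application, forwarding the current user's token.
    private static void invokeServiceWithToken(HttpServletRequest req, String uri) {
        // try-with-resources closes the client even if the call fails
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            HttpGet get = new HttpGet(uri);

            // The Keycloak adapter exposes the authenticated session as a request attribute
            KeycloakSecurityContext session = (KeycloakSecurityContext) req
                    .getAttribute(KeycloakSecurityContext.class.getName());

            if (session != null) {
                // Pass the access token on as an OAuth 2.0 bearer token
                get.addHeader("Authorization", "Bearer " + session.getTokenString());
            }

            try (CloseableHttpResponse response = httpClient.execute(get)) {
                // Consume the body so the connection is released cleanly
                EntityUtils.consume(response.getEntity());
            }
        } catch (IOException e) {
            throw new RuntimeException("Error invoking " + uri, e);
        }
    }
}
```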

For more concrete examples, follow the link mentioned in section “Example Projects”.

The flow in the figure above illustrates an example using applications deployed on EAP, BPM Suite and Fuse; however, it can be generalised to any number of enterprise applications.

Single Sign-On Installation

The Red Hat Single Sign-On product can be downloaded from the Red Hat website; a Red Hat account with an active subscription is required. The open-source upstream project, Keycloak, is available to download from here.

We first download the Red Hat Single Sign-On server and start it with the default configuration. However, we may need to change the default ports if other EAP servers are already running on the same host machine.

To change the default ports, add a JBoss socket binding port offset to the standalone.xml (or domain.xml) configuration:

```xml
<system-properties>
    <property name="jboss.socket.binding.port-offset" value="1600"/>
</system-properties>
```

Once the server has started successfully, visit http://localhost:9680/ to check that the Red Hat Single Sign-On server is running (with an offset of 1600, the default HTTP port 8080 becomes 9680).

By default, the Red Hat Single Sign-On server uses an embedded H2 database to store realm and user data. However, we can also configure an external database; for instance, an external database is required when running in a clustered environment. Red Hat Single Sign-On supports many databases, such as Oracle, MySQL and PostgreSQL; refer to the Red Hat documentation for the current list of supported databases.

The first step after installing the server is to create an admin user account to manage the Red Hat Single Sign-On server.

To create the admin user, open http://localhost:9680/auth

After creating the admin user, we can log in to the server and start working with the Red Hat Single Sign-On configuration. To log in to the Red Hat Single Sign-On server, use http://localhost:9680/auth/admin/

Realm

A realm manages a set of users, roles and applications (clients) and is used to restrict access to the resources of different applications. Each realm is typically mapped to a domain, and we can add as many realms as needed for different domains. Red Hat Single Sign-On provides a default master realm, which the admin user can use to manage other realms. We can add multiple realms through the Administration Console. Different applications can also use different authentication protocols: for instance, clients in Realm A can use OIDC while clients in Realm B use SAML (the protocol is selected per client). Similarly, each realm can be associated with a different LDAP or AD server for user authentication.

Master Realm vs Application Realm

The master realm in Red Hat Single Sign-On is a special realm and should be handled differently from application-specific realms. The best practice is to create a separate realm for securing applications and keep the master realm for management purposes only, that is, as the place to create and manage the other realms in our system.

Creating Realm and User

To create a new realm, open http://localhost:9680/auth/admin/ and use the “Add Realm” option.

Detailed steps can be found at the following link: creating a realm

Create a new user by clicking the “Users” menu and then “Add User”. This user is used to authenticate and authorise access to the configured application, which can be a web application, a web service or a simple mobile application.
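The console steps above can also be scripted. The sketch below (Java 11+ `java.net.http`) only builds the Admin REST API requests that create a realm and a user in it; it does not send them. It assumes `serverUrl` is the server's “/auth” base URL and that `adminToken` is an access token already obtained for an administrator, for example from the master realm's token endpoint with the “admin-cli” client. `AdminRequests` and its methods are hypothetical names, and the JSON is built by hand, so realm and user names must not contain characters that need escaping.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds Admin REST API requests; it does not send them.
// JSON is assembled by hand, so names must not need JSON escaping.
final class AdminRequests {

    private AdminRequests() {
    }

    // POST {serverUrl}/admin/realms creates a realm from a minimal representation.
    static HttpRequest createRealm(String serverUrl, String adminToken, String realm) {
        String json = "{\"realm\":\"" + realm + "\",\"enabled\":true}";
        return post(serverUrl + "/admin/realms", adminToken, json);
    }

    // POST {serverUrl}/admin/realms/{realm}/users creates a user in that realm.
    static HttpRequest createUser(String serverUrl, String adminToken, String realm, String username) {
        String json = "{\"username\":\"" + username + "\",\"enabled\":true}";
        return post(serverUrl + "/admin/realms/" + realm + "/users", adminToken, json);
    }

    // Shared builder: JSON body plus the administrator's bearer token.
    private static HttpRequest post(String url, String adminToken, String json) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + adminToken)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }
}
```

To execute a request, pass it to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`; a successful create returns 201 Created. The realm must exist before a user can be created in it.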

User Login and Logout

Since users are managed per realm, make sure the URL contains the correct realm when logging in with a specific user.

For example, to log in with a user associated with the “test” realm, the URL should be: http://localhost:9680/auth/realms/test/account.
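Because every realm has its own endpoints under “/auth/realms/{realm-name}”, it can help to derive them in one place. A small sketch, where `RealmUrls` is a hypothetical helper: the account path is the one shown above, the token endpoint path matches the one used in the curl example later in this post, and “.well-known/openid-configuration” is the standard OpenID Connect discovery location.

```java
// Hypothetical helper that derives per-realm URLs from the server's base URL
// (for example http://localhost:9680/auth).
final class RealmUrls {

    private RealmUrls() {
    }

    // Strip a trailing slash so paths are not joined with "//"
    private static String realmBase(String serverUrl, String realm) {
        String base = serverUrl.endsWith("/")
                ? serverUrl.substring(0, serverUrl.length() - 1)
                : serverUrl;
        return base + "/realms/" + realm;
    }

    // Account console where a realm's users log in and manage their profile
    static String account(String serverUrl, String realm) {
        return realmBase(serverUrl, realm) + "/account";
    }

    // OpenID Connect token endpoint of the realm
    static String tokenEndpoint(String serverUrl, String realm) {
        return realmBase(serverUrl, realm) + "/protocol/openid-connect/token";
    }

    // OpenID Connect discovery document listing the realm's endpoints
    static String discovery(String serverUrl, String realm) {
        return realmBase(serverUrl, realm) + "/.well-known/openid-configuration";
    }
}
```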

Themes and Custom Login Page

The Red Hat Single Sign-On server comes with a default theme and login page. It is possible to create a custom login page specific to an application and deploy it to the Red Hat Single Sign-On server. The server can also be themed to give users a seamless experience. Please refer to the Red Hat documentation for more information.

Integrating Single Sign-On with JBoss EAP

Overview

This section explains the integration and configuration of Red Hat Single Sign-On with JBoss EAP. We assume that EAP is running in standalone mode, so we modify the standalone.xml file. Depending on the operating mode, standalone-ha.xml or domain.xml may need to be modified instead.

Client Adapter

To secure an application with Red Hat Single Sign-On, we need to install the Red Hat Single Sign-On client adapter. The client adapter provides tight integration between Red Hat Single Sign-On and the target platform, enabling the application to communicate with, and be secured by, Red Hat Single Sign-On.

To begin, download the client adapter from the Red Hat website (an account with an active subscription is required), choosing the adapter version that matches the platform in use. The client adapter is then installed with a simple CLI command, as explained here

Register Application with Single Sign-On Server

Any application that needs to be secured with SSO has to be registered with the Single Sign-On server. We first create and register the client using the Red Hat Single Sign-On admin console.

Log in to the admin console and use the “Clients” tab to register the client application. Please refer to the Red Hat documentation for detailed steps on creating and registering a new client application.
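Client registration can likewise be automated through the Admin REST API. A minimal sketch (Java 11+), assuming an admin access token is already available; `ClientRegistration`, `publicOidcClient` and the redirect URI value are hypothetical. It builds, but does not send, a request registering a public OIDC client. The `clientId` should match the `<resource>` value used in the adapter configuration (“Test” in the example that follows).

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds (does not send) the Admin REST API request that
// registers a public OpenID Connect client in a realm.
final class ClientRegistration {

    private ClientRegistration() {
    }

    // redirectUri is an assumption, e.g. "http://localhost:8080/TestApp/*".
    // Values are inserted without JSON escaping, so keep them simple.
    static HttpRequest publicOidcClient(String serverUrl, String adminToken, String realm,
                                        String clientId, String redirectUri) {
        String json = "{"
                + "\"clientId\":\"" + clientId + "\","
                + "\"protocol\":\"openid-connect\","
                + "\"publicClient\":true,"
                + "\"redirectUris\":[\"" + redirectUri + "\"]"
                + "}";
        return HttpRequest.newBuilder(URI.create(serverUrl + "/admin/realms/" + realm + "/clients"))
                .header("Authorization", "Bearer " + adminToken)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }
}
```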

After registering the client, we configure the client installation either through the adapter subsystem configuration in standalone.xml or through the Per WAR Keycloak configuration.

To configure using adapter subsystem configuration, open the standalone/configuration/standalone.xml file and add the following text:

```xml
<subsystem xmlns="urn:jboss:domain:keycloak:1.1">
    <secure-deployment name="TestApp.war">
        <realm>testrealm</realm>
        <auth-server-url>http://localhost:9680/auth</auth-server-url>
        <public-client>true</public-client>
        <ssl-required>EXTERNAL</ssl-required>
        <resource>Test</resource>
    </secure-deployment>
</subsystem>
```

Please note that TestApp.war must be replaced with the actual application WAR name. This secures the application through the adapter subsystem, as opposed to securing it through configuration files (such as web.xml) inside each application WAR.

Please also note that, depending on the operating mode, the same change may need to be made in standalone-ha.xml or domain.xml instead.

For detailed information about securing an application using “Per WAR Keycloak configuration”, please refer to the guide here

Per WAR Keycloak vs Adapter Subsystem configuration

With Red Hat Single Sign-On, there are two ways to secure an application:

- Per WAR Keycloak configuration

- Adapter Subsystem configuration

“Per WAR Keycloak configuration” requires adding configuration and editing files for each WAR separately. With the “Adapter Subsystem configuration”, on the other hand, the changes need to be made only in the standalone.xml (or domain.xml) file.

With the “Adapter Subsystem configuration”, securing multiple application WARs with Red Hat Single Sign-On requires changes to the standalone.xml (or domain.xml) file only. When we want to automate deployment to different host environments, this method provides more flexibility than “Per WAR Keycloak configuration”, because environment-specific values are easier to configure in standalone.xml (or domain.xml) than in each application WAR. Moreover, standalone.xml (or domain.xml) is generally already configured for each environment (System Test, UAT, Performance Test, etc.), so little or no extra effort may be needed.
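To illustrate why this suits automation, here is a sketch of a build step that renders the `<secure-deployment>` element from environment-specific values, so the same WAR can be promoted from System Test to UAT without being rebuilt. Everything here is hypothetical: the class name, the environment keys and the example.com host names are placeholders, and values are inserted without XML escaping, so they must come from trusted pipeline configuration.

```java
import java.util.Map;

// Hypothetical CI helper: renders an adapter-subsystem <secure-deployment>
// element for a target environment. Values are not XML-escaped.
final class SecureDeploymentXml {

    // Placeholder SSO server URLs per environment (replace with real hosts)
    static final Map<String, String> AUTH_SERVER_URLS = Map.of(
            "systest", "https://sso.systest.example.com/auth",
            "uat", "https://sso.uat.example.com/auth",
            "perf", "https://sso.perf.example.com/auth");

    private SecureDeploymentXml() {
    }

    static String render(String environment, String warName, String realm, String resource) {
        String authServerUrl = AUTH_SERVER_URLS.get(environment);
        if (authServerUrl == null) {
            throw new IllegalArgumentException("Unknown environment: " + environment);
        }
        // Same elements as the standalone.xml example above; only the URL varies
        return String.join("\n",
                "<secure-deployment name=\"" + warName + "\">",
                "    <realm>" + realm + "</realm>",
                "    <auth-server-url>" + authServerUrl + "</auth-server-url>",
                "    <public-client>true</public-client>",
                "    <ssl-required>EXTERNAL</ssl-required>",
                "    <resource>" + resource + "</resource>",
                "</secure-deployment>");
    }
}
```

A pipeline stage could write the rendered fragment into the target environment's standalone.xml before the WAR is deployed, leaving the WAR itself identical across environments.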

Single Sign-On and Continuous Integration

In this section, we discuss the life cycle and explain how to enable continuous integration or continuous delivery with Single Sign-On.

Installation, Deployment, Configuration and Management

With Red Hat Single Sign-On, we can use any automation tool to enable a CI/CD process. For example, I have used GoCD for continuous integration and continuous delivery.

The figure below also shows the general continuous integration flow, which can be implemented with any CI or CD tool, such as Jenkins, TeamCity or Hudson. In addition, we can use configuration management tools like Ansible to automate server installation and infrastructure. However, this is not mandatory for the CI process, as installing servers and building infrastructure can be a one-time process.

Please note that steps 1, 2 and 3 in the figure below can either be done in parallel or follow any order.

Step 1 is a basic step in any CI process: it builds the development projects and generates the artifacts required for deployment to the application servers.

Step 2 simply installs the client adapter on EAP, BPM Suite and Fuse.

Step 3 covers the life cycle of the Red Hat Single Sign-On server, including its installation, administration and management.

We start by installing the Red Hat Single Sign-On server. After installing the server successfully, we perform some administration work, which includes configuring the master realm and creating an admin user. We also create an application-specific realm. Note that the master realm could also be used for application authentication; however, good practice is to keep the master realm for the administration and management of other realms and to use application-specific realms for application authentication.

Thereafter, we create and register the client in the Red Hat Single Sign-On server. For CI automation, I found the “Adapter Subsystem configuration” much better, as it makes it easy to deploy to different environments on the fly without manually changing any host URL or configuration. Therefore, after registering the client, we also configure the application using the “Adapter Subsystem configuration” method to secure it with the Red Hat Single Sign-On server.

Lastly, Step 4 deploys the applications to their respective application servers. Please note that with the “Per WAR Keycloak configuration” method, we also need to add a keycloak.json file (in the WEB-INF directory) to each application WAR artifact.

This is a general, high-level flow spanning different application servers, and it can easily be generalised to any kind and number of applications.

Configuring LDAP and Active Directory for Single Sign-On

Red Hat Single Sign-On supports LDAP and Active Directory out of the box, allowing them to be used as external user databases. It works by importing users on demand into its local database, and we can configure how the user information is updated.

To configure this in Red Hat Single Sign-On, click “User Federation” and then choose “Add Provider”. The screenshots below show the options required to integrate with LDAP. Enter your own LDAP configuration values and click “Sync all users” or “Sync changed users” to load or update the user information.

More information about this can be found here

By default, the Red Hat Single Sign-On server imports LDAP users into its local database. To keep the user information up to date and in sync with the LDAP server, we can use “Sync Settings” to configure periodic synchronisation.
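If synchronisation needs to be triggered from a script rather than the console buttons, the Admin REST API exposes a sync call on the user federation provider. The endpoint path and the `triggerFullSync` / `triggerChangedUsersSync` action values below are as I understand that API; please confirm them against the API documentation for your version. The sketch (Java 11+) only builds the request; `LdapSync`, `Mode` and `trigger` are hypothetical names, and `providerId` is assumed to be the ID of the LDAP provider configured in the realm.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds (does not send) the Admin REST API request that
// triggers an LDAP/AD synchronisation for a user federation provider.
final class LdapSync {

    // Mirrors the "Sync all users" and "Sync changed users" console buttons
    enum Mode {
        ALL_USERS("triggerFullSync"),
        CHANGED_USERS("triggerChangedUsersSync");

        final String action;

        Mode(String action) {
            this.action = action;
        }
    }

    private LdapSync() {
    }

    // providerId is the ID of the LDAP provider in the realm (an assumption)
    static HttpRequest trigger(String serverUrl, String adminToken, String realm,
                               String providerId, Mode mode) {
        String url = serverUrl + "/admin/realms/" + realm
                + "/user-storage/" + providerId + "/sync?action=" + mode.action;
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + adminToken)
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }
}
```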

Integrating Single Sign-On with JBoss BPM Suite

We assume that JBoss BPM Suite is already installed and running. To configure JBoss BPM Suite with Single Sign-On, we will follow the steps below:

- Install the client adapter on top of JBoss BPM Suite. The client adapter can be installed in a similar way as explained in section “Integrating Single Sign-On with JBoss EAP”.

Next, we modify standalone.xml as in the example below:

```xml
<subsystem xmlns="urn:jboss:domain:keycloak:1.1">
    <secure-deployment name="business-central.war">
        <realm>testrealm</realm>
        <realm-public-key>ABC</realm-public-key>
        <auth-server-url>http://localhost:9680/auth</auth-server-url>
        <ssl-required>EXTERNAL</ssl-required>
        <resource>kie</resource>
        <credential name="secret">a7b4-35ea5d3dcc63</credential>
        <principal-attribute>preferred_username</principal-attribute>
        <enable-basic-auth>true</enable-basic-auth>
    </secure-deployment>
    <secure-deployment name="kie-server.war">
        <realm>testrealm</realm>
        <realm-public-key>ABC</realm-public-key>
        <auth-server-url>http://localhost:9680/auth</auth-server-url>
        <ssl-required>EXTERNAL</ssl-required>
        <resource>kie-execution-server</resource>
        <credential name="secret">bd57-fe1ae4781649</credential>
        <principal-attribute>preferred_username</principal-attribute>
        <enable-basic-auth>true</enable-basic-auth>
    </secure-deployment>
</subsystem>
```

Please note that the realm, realm-public-key and secret credential values must be replaced with the actual values from our own realm.
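The “enable-basic-auth” option lets a client call these deployments with plain HTTP Basic credentials, which the adapter exchanges for a token on the client's behalf; this is why a client secret is configured alongside it. A minimal sketch of such a call to KIE Server (Java 11+), building the request only. The base URL (`http://localhost:8080/kie-server`), the “/services/rest/server” path (the KIE Server information resource) and the credentials are assumptions to adjust for your installation, and `KieServerCall` is a hypothetical name.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper: builds a KIE Server REST request authenticated with
// HTTP Basic credentials, relying on enable-basic-auth in the adapter config.
final class KieServerCall {

    private KieServerCall() {
    }

    // "Basic " + base64(username:password), as defined for HTTP Basic auth
    static String basicAuthHeader(String username, String password) {
        String pair = username + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    // GET {kieServerBaseUrl}/services/rest/server asking for a JSON response
    static HttpRequest serverInfo(String kieServerBaseUrl, String username, String password) {
        return HttpRequest.newBuilder(URI.create(kieServerBaseUrl + "/services/rest/server"))
                .header("Authorization", basicAuthHeader(username, password))
                .header("Accept", "application/json")
                .GET()
                .build();
    }
}
```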

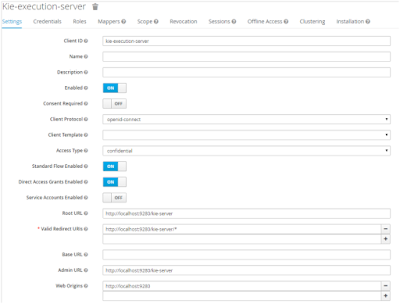

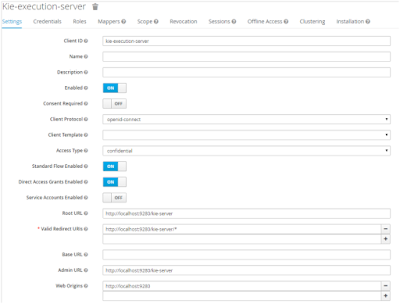

- Configure the realm clients for Business Central and kie-server in Red Hat Single Sign-On, as shown in the figure below:


After performing the above changes, accessing Business Central should redirect to the Red Hat Single Sign-On login screen. We can also secure the Business Central remote engine services API with Red Hat Single Sign-On. A detailed explanation can be found here

Token Endpoints API

To generate a new token from the Red Hat Single Sign-On server, we can use either of the following:

- Use the curl command to create a new token, as below:

```shell
curl -X POST -d "client_id=frontend" -d "username=mali" -d "password=mystrongpassword" -d "grant_type=password" http://localhost:9680/auth/realms/TestRealm/protocol/openid-connect/token
```

- Use any REST-based tool to generate a token. The screenshot below is from Postman.

Please refer to the API documentation for details of the API.
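The same password-grant request can be made from Java code. A minimal sketch (Java 11+) mirroring the curl command: it form-encodes the parameters and builds a POST to the realm's token endpoint, without sending it. `TokenRequest` and its methods are hypothetical names; the client, user and password values are the ones from the curl example, and the grant only works if the client allows direct access grants.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: builds (does not send) an OAuth 2.0 password-grant
// request against a realm's OpenID Connect token endpoint.
final class TokenRequest {

    private TokenRequest() {
    }

    // application/x-www-form-urlencoded body, e.g. "client_id=frontend&grant_type=password"
    static String formBody(Map<String, String> params) {
        return params.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8)
                        + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }

    static HttpRequest passwordGrant(String tokenEndpoint, String clientId,
                                     String username, String password) {
        // LinkedHashMap keeps the parameters in the same order as the curl example
        Map<String, String> params = new LinkedHashMap<>();
        params.put("client_id", clientId);
        params.put("username", username);
        params.put("password", password);
        params.put("grant_type", "password");
        return HttpRequest.newBuilder(URI.create(tokenEndpoint))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(params)))
                .build();
    }
}
```

The JSON response contains an `access_token` (along with fields such as `expires_in` and `refresh_token`); the access token is the value that goes into the “Authorization: Bearer” header when calling other applications.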

Example Projects

There are some example projects available on GitHub that quickly demonstrate Red Hat Single Sign-On integration with Java applications. These projects are available here
